Exploring Visual Question Answering using Python

Introduction

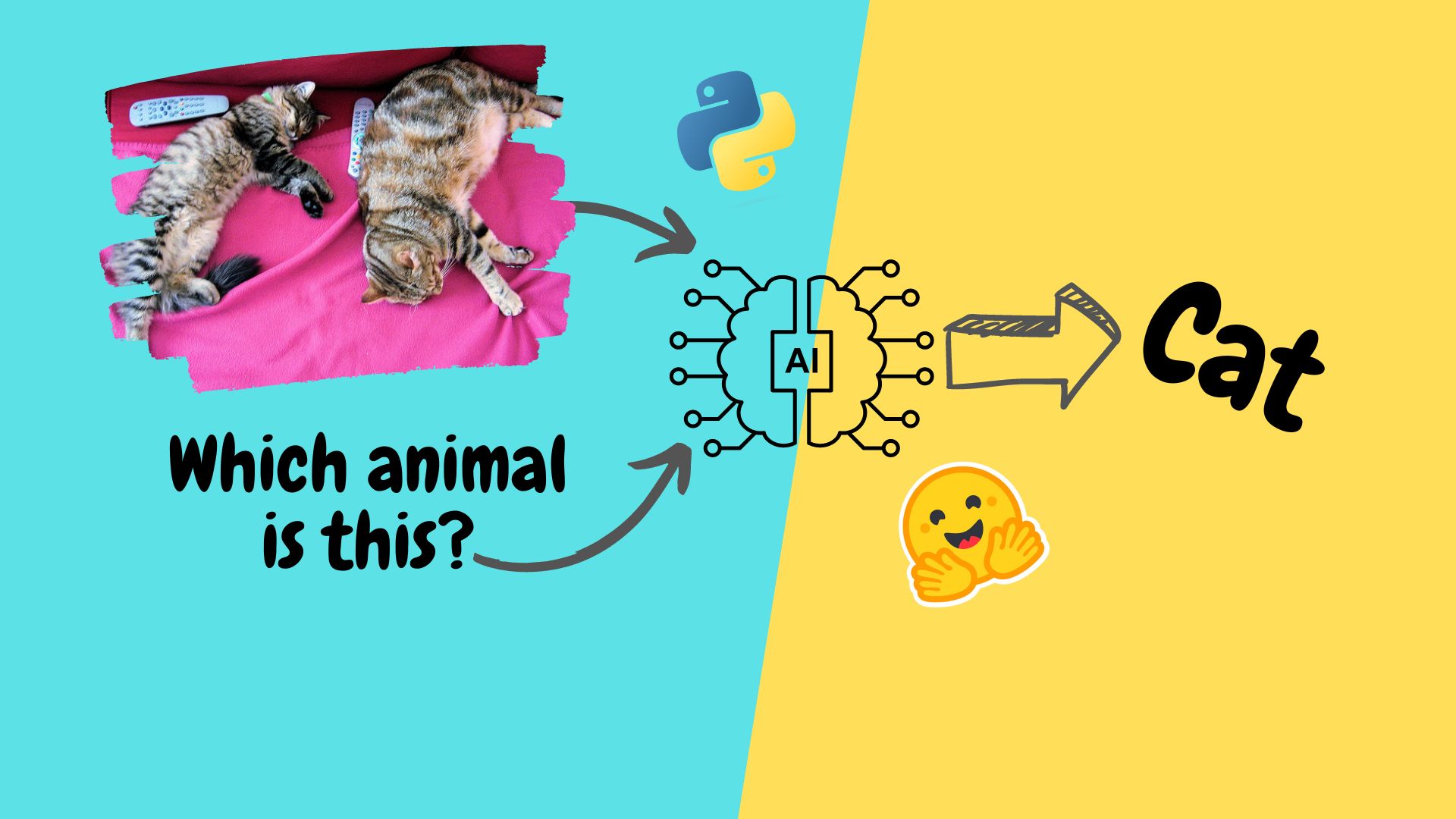

Visual Question Answering (VQA) is a subfield of artificial intelligence which aims at answering questions related to a picture.

For example, if you give the AI model a picture of a cat and ask questions related to it, the AI model will answer you correctly.

In this tutorial, we will use a free model available in Huggingface to perform VQA with Python. Don't be afraid if all this seems intimidating. We will guide you step-by-step.

𝗢𝘃𝗲𝗿𝘃𝗶𝗲𝘄

Now that we have a basic understanding of the model's capabilities, let's start coding.

𝗦𝗲𝘁𝘁𝗶𝗻𝗴 𝗨𝗽 𝘁𝗵𝗲 𝗘𝗻𝘃𝗶𝗿𝗼𝗻𝗺𝗲𝗻𝘁

Before coding, there are a few libraries you have to download.

To do that, open the command prompt in Windows (by typing cmd in start) or the terminal in Linux/mac.

Now type the following lines of code:pip install transformerspip install requestspip install pillow

𝗜𝗺𝗽𝗹𝗲𝗺𝗲𝗻𝘁𝗶𝗻𝗴 VQA

We are going to code in Python, So open your favourite text editor.

Note: The full code is provide at the end.

First, we need to import the necessary libraries. Let's do that!

from transformers import ViltProcessor, ViltForQuestionAnsweringimport requestsfrom PIL import Image

Now we have to use the Hugging Face function to load our VQA model. The model which we are going to use is dandelin/vilt-b32-finetuned-vqa. We can do that by

processor = ViltProcessor.from_pretrained("dandelin/vilt-b32-finetuned-vqa")model = ViltForQuestionAnswering.from_pretrained("dandelin/vilt-b32-finetuned-vqa")

Now let's fetch the image. Type the image's URL, and let's use Image.open() to open the image. url = "http://images.cocodataset.org/val2017/000000039769.jpg" # sample imageimage = Image.open(requests.get(url, stream=True).raw)

Now let's ask the question which you want to ask:text = 'Which animal is this?'

Now, we have to change the question into the correct format, and load it into the model.encoding = processor(image, text, return_tensors="pt")outputs = model(**encoding)logits = outputs.logitsidx = logits.argmax(-1).item()

We can now get the output by:print("Predicted answer:", model.config.id2label[idx])

Thus, we have our own VQA model in our hands.

Conclusion

You can put your favourite picture into this model, and ask it questions. But remember that this model is not perfect.

In fact, it is light-years away from perfection. So use it with caution.

Here is the whole code, for the copy pasters:)from transformers import ViltProcessor, ViltForQuestionAnsweringimport requestsfrom PIL import Imageprocessor = ViltProcessor.from_pretrained("dandelin/vilt-b32-finetuned-vqa")model = ViltForQuestionAnswering.from_pretrained("dandelin/vilt-b32-finetuned-vqa")url = "http://images.cocodataset.org/val2017/000000039769.jpg"image = Image.open(requests.get(url, stream=True).raw)text = 'Which animal is this?'encoding = processor(image, text, return_tensors="pt")outputs = model(**encoding)logits = outputs.logitsidx = logits.argmax(-1).item()print("Predicted answer:", model.config.id2label[idx])

If you have any questions, feel free to ask it in the comment section.

Note that the model and the code used are not ours, and are available in the Hugging Face library.

BibTeX entry and citation info

@misc{kim2021vilt,

title={ViLT: Vision-and-Language Transformer Without Convolution or Region Supervision},

author={Wonjae Kim and Bokyung Son and Ildoo Kim},

year={2021},

eprint={2102.03334},

archivePrefix={arXiv},

primaryClass={stat.ML}

}

Comments